Enterprise Observability Stack

Building production-grade, self-hosted observability with Prometheus, Grafana, and Loki, achieving full-stack visibility at a fraction of the cost of cloud solutions

The Challenge

The platform had zero observability across 20 microservices running on Kubernetes. Critical operational questions remained unanswered:

- "Which service is causing the 500 errors?"

- "Why did the pod restart 5 times in the last hour?"

- "What's our Kafka consumer lag right now?"

- "Are we hitting CPU/memory limits?"

- "Where are the logs for that failed deployment?"

The mandate: build enterprise-grade observability in-house at minimal cost while maintaining production reliability.

Business Impact: Cloud observability solutions (Datadog, New Relic, Splunk) would cost $50K-150K/year at our scale, which was prohibitively expensive for the budget.

The Solution: Self-Hosted Observability Platform

I architected and deployed a complete self-hosted observability stack using open-source tooling, achieving enterprise-grade monitoring at <5% of cloud costs.
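For scale, the "<5% of cloud costs" claim can be turned into an annual dollar ceiling using the $50K-150K/year estimate above. This is plain arithmetic on the document's own figures, not a reported actual cost:

```latex
0.05 \times \$50{,}000 = \$2{,}500 \text{ per year}, \qquad 0.05 \times \$150{,}000 = \$7{,}500 \text{ per year}
```

Measured against the low end of the estimate, the self-hosted stack would cost under roughly $2,500 per year. Measured against the high end, it would cost under roughly $7,500.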

- Prometheus + Thanos: Time-series metrics with S3 long-term storage
- Grafana: 25+ custom dashboards
- Loki + Promtail: Microservices mode with S3

Architecture

Enterprise Observability Stack Architecture

Metrics Flow: Services (expose /metrics) + Exporters → Prometheus (scrape) → Thanos → S3

Logs Flow: Services (stdout/stderr) → Promtail (DaemonSet collector) → Loki (aggregate)

Traces Flow: Services (OpenTelemetry instrumentation) → Tempo (distributed trace storage)

Visualization: Grafana queries Prometheus, Loki, and Tempo for unified observability (metrics + logs + traces)

Alerting: Prometheus → Alertmanager (routing + inhibition) → Teams (environment-specific channels)
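In the metrics flow, each service exposes a `/metrics` endpoint that Prometheus scrapes. The following is a minimal, stdlib-only sketch of what such an endpoint returns in the Prometheus text exposition format. The metric `http_requests_total`, its `method`/`status` labels and the port are hypothetical examples, not the platform's actual instrumentation.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def escape_label_value(value: str) -> str:
    # The text format requires escaping backslash, double quote and newline in label values.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name: str, help_text: str, samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one counter family in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples:
        label_str = ",".join(
            f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
        )
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical in-process state: (method, status) -> request count.
REQUEST_COUNTS = {("GET", "200"): 1027, ("GET", "500"): 3}


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        samples = [({"method": m, "status": s}, n) for (m, s), n in REQUEST_COUNTS.items()]
        body = render_counter("http_requests_total", "Total HTTP requests.", samples).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Blocking: expose http://<host>:<port>/metrics for Prometheus to scrape."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` blocks the process. In a real service, an official client library (for Python, `prometheus_client`) would normally provide this endpoint along with histograms and process metrics.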

Core Stack Components

1. Prometheus (Metrics Collection)

Configuration: Kustomize base + environment overlays (dev, qa, preprod, prod)

Service Discovery: Kubernetes SD for automatic service detection

Long-term Storage: Thanos sidecar → S3 for historical data

Retention: 15 days local, unlimited S3 storage
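The exact scrape configuration is not shown here. One widely used Kubernetes SD convention, assumed for this sketch, opts pods in through `prometheus.io/scrape`, `prometheus.io/port` and `prometheus.io/path` annotations; relabel rules then turn these into scrape targets. Prometheus exposes each annotation as a `__meta_kubernetes_pod_annotation_<name>` meta label with invalid characters replaced by underscores. The plain-Python sketch below mirrors that per-pod relabelling. The fallback port 8080 is hypothetical.

```python
import re
from typing import Optional


def sanitize(name: str) -> str:
    # Characters that are not valid in a label name (such as "." and "/") become "_".
    return re.sub(r"[^a-zA-Z0-9_]", "_", name)


def relabel_pod(pod: dict) -> Optional[dict[str, str]]:
    """Mimic annotation-based relabelling: keep opted-in pods, derive address and path."""
    meta = {
        "__meta_kubernetes_namespace": pod["namespace"],
        "__meta_kubernetes_pod_name": pod["name"],
        "__meta_kubernetes_pod_ip": pod["ip"],
    }
    for key, value in pod.get("annotations", {}).items():
        meta["__meta_kubernetes_pod_annotation_" + sanitize(key)] = value

    # Equivalent of a relabel "keep" action on prometheus.io/scrape == "true".
    if meta.get("__meta_kubernetes_pod_annotation_prometheus_io_scrape") != "true":
        return None
    port = meta.get("__meta_kubernetes_pod_annotation_prometheus_io_port", "8080")  # hypothetical default
    path = meta.get("__meta_kubernetes_pod_annotation_prometheus_io_path", "/metrics")
    # __address__ and __metrics_path__ control the scrape; namespace and pod become series labels.
    return {
        "__address__": f"{meta['__meta_kubernetes_pod_ip']}:{port}",
        "__metrics_path__": path,
        "namespace": meta["__meta_kubernetes_namespace"],
        "pod": meta["__meta_kubernetes_pod_name"],
    }
```

With this convention, a new microservice becomes a scrape target as soon as its pod template carries the annotations. The Prometheus configuration does not need to change.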

2. Exporters (Data Sources)

Deployed comprehensive exporters for full-stack visibility:

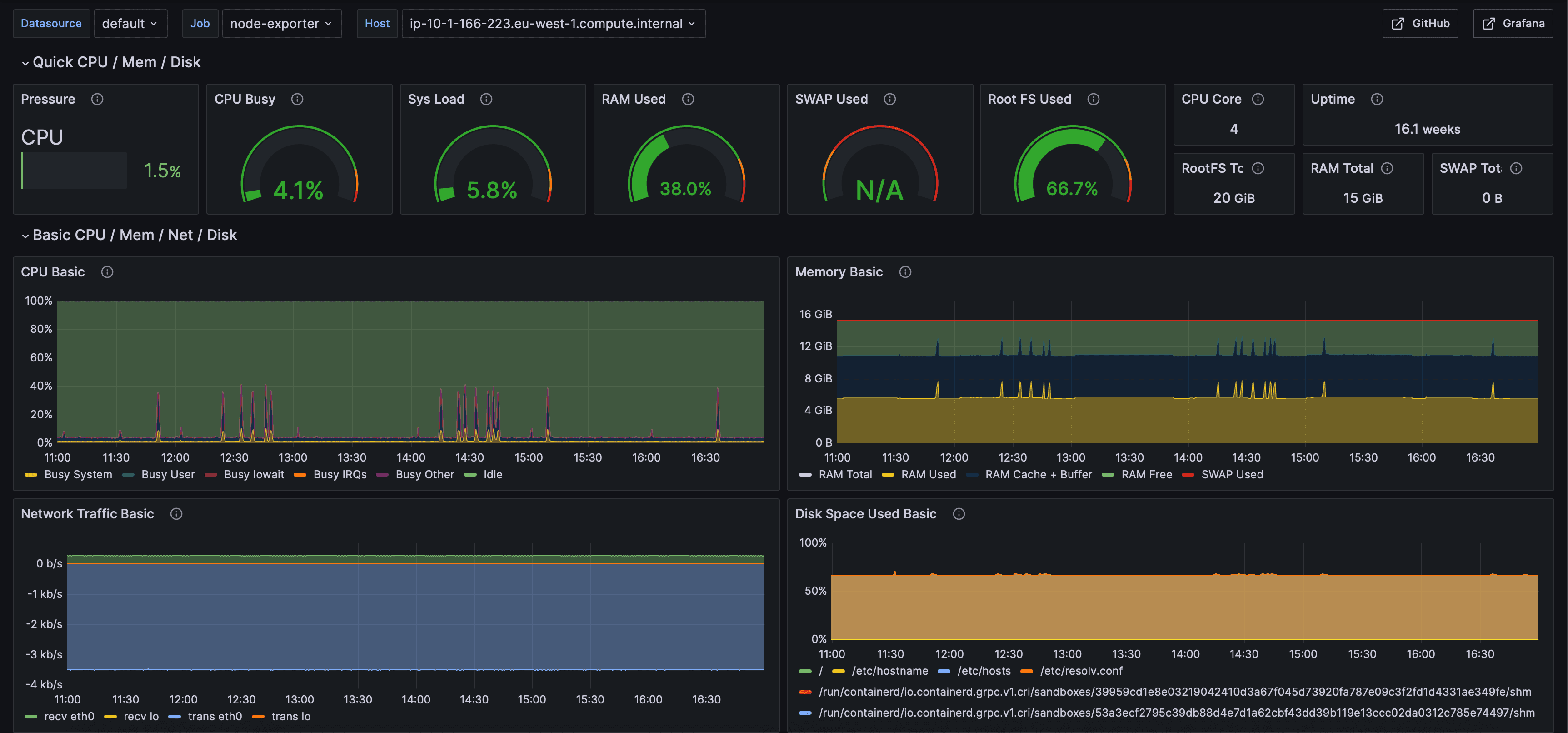

- Node Exporter: CPU, memory, disk, network from EC2 instances
- Kube State Metrics: Kubernetes object state (pods, deployments, nodes)
- Kafka Exporter: MSK consumer lag, partition offsets, topic metrics
- Postgres Exporter: Database connections, query performance
- CloudWatch Exporter: AWS service metrics (RDS, MSK, ELB)
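Consumer lag, the headline Kafka metric, is a per-partition difference: the partition's log-end offset minus the consumer group's committed offset. The minimal sketch below uses made-up offsets. Leaving out partitions where the group has never committed is one of several reasonable conventions, and is an assumption here.

```python
def consumer_lag(log_end_offsets: dict[int, int], committed_offsets: dict[int, int]) -> tuple[dict[int, int], int]:
    """Per-partition lag for one consumer group on one topic, plus the total.

    lag = partition log-end offset - group committed offset
    """
    per_partition = {}
    for partition, committed in committed_offsets.items():
        end = log_end_offsets[partition]
        # The two offsets are fetched separately, so a commit can briefly look
        # ahead of the sampled end offset; clamp at zero rather than report negative lag.
        per_partition[partition] = max(end - committed, 0)
    return per_partition, sum(per_partition.values())
```

For example, `consumer_lag({0: 1500, 1: 1200, 2: 980}, {0: 1450, 1: 1200, 2: 900})` returns `({0: 50, 1: 0, 2: 80}, 130)`. A steadily growing total is the signal that consumers are falling behind.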

3. Loki (Log Aggregation)

Architecture: Microservices mode (distributor, ingester, querier, query-frontend, compactor)

Storage: S3 backend for cost-effective log storage

Collection: Promtail DaemonSet scraping pod logs

Indexing: Label-based indexing (namespace, pod, container)
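Label-based indexing means Loki indexes only a small label set per stream, not the log text. On each node, the kubelet writes container logs to `/var/log/pods/<namespace>_<pod>_<pod-uid>/<container>/<n>.log`, and the `namespace`, `pod` and `container` labels correspond directly to that layout. The sketch below illustrates the mapping in plain Python and builds the matching LogQL stream selector. It is not Promtail's implementation; Promtail derives labels from Kubernetes service discovery.

```python
from pathlib import PurePosixPath


def labels_from_log_path(path: str) -> dict[str, str]:
    """Derive stream labels from a kubelet pod log path.

    Expected layout: /var/log/pods/<namespace>_<pod>_<pod-uid>/<container>/<restart>.log
    """
    parts = PurePosixPath(path).parts
    pod_dir, container = parts[-3], parts[-2]
    # Namespace and pod names are DNS names and never contain "_", so this split is safe.
    namespace, pod, _uid = pod_dir.split("_")
    return {"namespace": namespace, "pod": pod, "container": container}


def stream_selector(labels: dict[str, str]) -> str:
    """Build a LogQL stream selector such as {namespace="production", pod="..."}."""
    return "{" + ", ".join(f'{k}="{v}"' for k, v in sorted(labels.items())) + "}"
```

A query such as `{namespace="production", pod="service-a-abc123"} |= "error"` uses the index only to find the matching streams, then scans those chunks for the text filter. Keeping the label set to low-cardinality fields is therefore what keeps the index small and S3 storage cheap.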

4. Alertmanager (Alert Routing)

Integration: Teams webhooks via Power Automate workflows

Smart Routing: Environment-specific channels (Dev → business hours, Prod → 24/7)

Alert Inhibition: Suppress low-severity alerts when critical alerts fire

Grouping: Batch alerts by namespace/service to reduce noise
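Alertmanager configures inhibition with source/target matchers plus a list of `equal` labels, and configures grouping with `group_by` on a route. The sketch below reproduces those two behaviours in plain Python to make the semantics concrete. The `critical`/`warning` severity values and the choice of `namespace` and `service` as shared labels are assumptions. Environment-based routing is not modelled.

```python
from collections import defaultdict

Alert = dict[str, str]  # an alert represented by its label set


def key(alert: Alert, labels: tuple[str, ...]) -> tuple:
    # Missing labels compare as None, so two alerts both lacking a label still match.
    return tuple(alert.get(name) for name in labels)


def inhibit(alerts: list[Alert], equal: tuple[str, ...] = ("namespace", "service")) -> list[Alert]:
    """Drop warning alerts whose `equal` labels match a firing critical alert."""
    critical = {key(a, equal) for a in alerts if a.get("severity") == "critical"}
    return [
        a for a in alerts
        if not (a.get("severity") == "warning" and key(a, equal) in critical)
    ]


def group(alerts: list[Alert], group_by: tuple[str, ...] = ("namespace", "service")) -> dict[tuple, list[str]]:
    """Batch alert names by their group_by labels: one notification per group."""
    groups: dict[tuple, list[str]] = defaultdict(list)
    for a in alerts:
        groups[key(a, group_by)].append(a["alertname"])
    return dict(groups)
```

Take three alerts: a critical crash-loop alert and a latency warning on `service-a`, plus a latency warning on `service-b`. Inhibition removes only the `service-a` warning, and grouping then yields one notification per service instead of one per alert.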

Alert Rules Implemented
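The following illustrates the rule mechanics with a rule answering the pod-restart question from the challenge. One such rule might be `increase(kube_pod_container_status_restarts_total[1h]) > 3` with `for: 2m`; the threshold and duration are hypothetical. The expression must stay true for the whole `for` duration before the alert moves from pending to firing, and only firing alerts are sent to Alertmanager. This sketch models that state machine for a single series.

```python
def rule_states(samples: list[tuple[int, float]], threshold: float, for_seconds: int) -> list[tuple[int, str]]:
    """State of a rule `expr > threshold` with a `for:` duration, per evaluation.

    samples: (unix_seconds, expression value) at each evaluation interval.
    """
    states = []
    active_since = None
    for ts, value in samples:
        if value > threshold:
            if active_since is None:
                active_since = ts  # condition first true: alert becomes pending
            held = ts - active_since
            states.append((ts, "firing" if held >= for_seconds else "pending"))
        else:
            active_since = None  # condition cleared: any pending/firing alert resolves
            states.append((ts, "inactive"))
    return states
```

With evaluations every 60 s, threshold 3 and `for_seconds=120`, the values `1, 4, 5, 6, 2` produce `inactive, pending, pending, firing, inactive`. A single brief spike therefore never pages anyone.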

Business Intelligence Dashboards

Created 10+ custom Grafana dashboards providing comprehensive visibility from infrastructure to business metrics.

Platform Overview Dashboards

- Kubernetes Cluster Health: Node status, pod health, resource utilisation
- Infrastructure Metrics: CPU, memory, disk, network across all nodes
- Message Queue Health: Kafka consumer lag, partition metrics, throughput

Service-Specific Dashboards

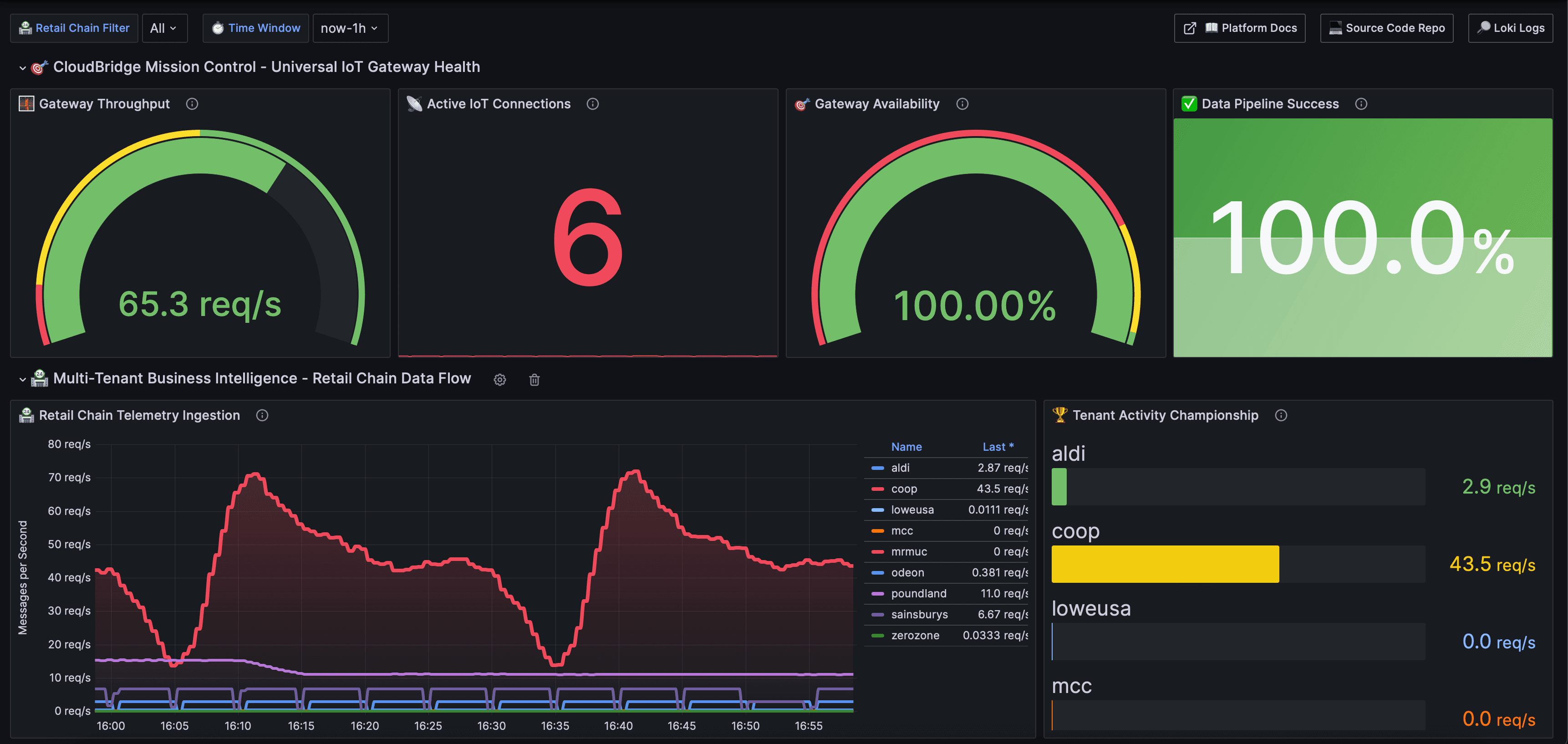

- IoT Gateway Intelligence: Throughput (req/s), active connections, vendor performance ranking
- Multi-Tenant Analytics: Tenant activity ranking, ingestion rates by tenant
- Integration Performance: Vendor system performance and tracking of $750K+ in annual savings

IoT Gateway Mission Control

Real-time gateway throughput (61.9 req/s), active IoT connections, availability tracking, and data pipeline success rates.
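A throughput panel like this is typically computed in Grafana with PromQL's `rate()` over a request counter. The hedged sketch below shows the core of that calculation: it sums the increases between samples and treats any decrease as a counter reset. Real `rate()` additionally extrapolates to the edges of the range window, so its results differ slightly. The sample data is made up.

```python
from typing import Optional


def simple_rate(samples: list[tuple[float, float]]) -> Optional[float]:
    """Per-second increase of a counter across (timestamp, value) samples.

    Handles counter resets like PromQL rate(), but does not extrapolate
    to the window boundaries the way rate() does.
    """
    if len(samples) < 2:
        return None
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        # A drop means the counter restarted from zero (e.g. the pod restarted).
        increase += curr - prev if curr >= prev else curr
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else None
```

For samples `(0, 1000), (15, 1900), (30, 2800), (45, 200), (60, 1100)`, the drop to 200 is treated as a restart. The total increase is 2900 requests over 60 s, about 48.3 req/s.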

Kafka Consumer Lag & Topic Health

Comprehensive Kafka monitoring showing consumer lag trends by group and topic, plus partition-level lag visualisation.

Node Exporter Infrastructure Metrics

System-level monitoring via Node Exporter showing CPU pressure, memory usage, disk I/O, and network traffic.
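Node Exporter reports CPU as cumulative seconds per CPU and mode in `node_cpu_seconds_total`, so utilisation must be derived from the change between two samples. A common PromQL form is `1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[5m]))`. The Python sketch below does the same arithmetic on two hypothetical snapshots, counting every non-idle mode (including `iowait`) as busy.

```python
def cpu_busy_fraction(before: dict[str, float], after: dict[str, float]) -> float:
    """Fraction of CPU time not spent idle between two node_cpu_seconds_total snapshots.

    before/after map mode ("idle", "user", "system", ...) to cumulative seconds,
    already summed over all CPUs of one node.
    """
    deltas = {mode: after[mode] - before[mode] for mode in after}
    total = sum(deltas.values())
    if total <= 0:
        return 0.0
    # Everything except idle counts as busy, including iowait.
    return 1.0 - deltas.get("idle", 0.0) / total
```

In the example below, 150 CPU-seconds elapse and 90 of them are idle, so the node is 40% busy.

```python
cpu_busy_fraction(
    {"idle": 1000.0, "user": 200.0, "system": 100.0, "iowait": 50.0},
    {"idle": 1090.0, "user": 250.0, "system": 110.0, "iowait": 50.0},
)
```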

Operational Runbooks

Created comprehensive runbooks for every alert, enabling rapid incident response and knowledge sharing.

Runbook Structure

- Clear description of what triggered the alert
- Decision tree with kubectl commands, log queries, and metric queries
- Copy-paste commands for typical fixes (restart pods, scale deployment)
- Escalation contacts if the standard resolution doesn't work

```shell
# Check pod status
kubectl get pods -l app=service-a -n production

# View recent logs
kubectl logs service-a-abc123 -n production --tail=100

# Check events
kubectl describe pod service-a-abc123 -n production

# Common fixes:
# Rolling restart of every pod in the deployment
kubectl rollout restart deployment/service-a -n production
# Delete a single pod; its ReplicaSet recreates it
kubectl delete pod service-a-abc123 -n production
```

Business Impact

Total cost (vs $50K-150K for cloud solutions)

Custom Grafana dashboards built from scratch

Alert rules covering infrastructure and apps

Prometheus exporters deployed across stack

Service coverage across all microservices

Environments with consistent observability

Technical Highlights

- Deployed complete self-hosted stack (Prometheus, Grafana, Loki, Alertmanager) with Kustomize
- Configured Loki microservices architecture for scalable log aggregation
- Integrated Thanos for long-term metrics storage in S3 (cost-effective historical data)
- Deployed 6+ specialised exporters for comprehensive infrastructure monitoring
- Created 50+ alert rules with smart routing and inhibition logic
- Instrumented all 20 microservices with custom Prometheus metrics
- Authored comprehensive runbooks for rapid incident response
- Achieved 95%+ cost savings vs commercial observability solutions

Want Similar Results?

I'd love to bring this same approach to your platform engineering challenges. Let's discuss how I can help your team.